For over 15 years, Waymo has researched AI and machine learning for autonomous driving. Today, Waymo published its latest research paper on an End-to-End Multimodal Model for Autonomous Driving (EMMA).

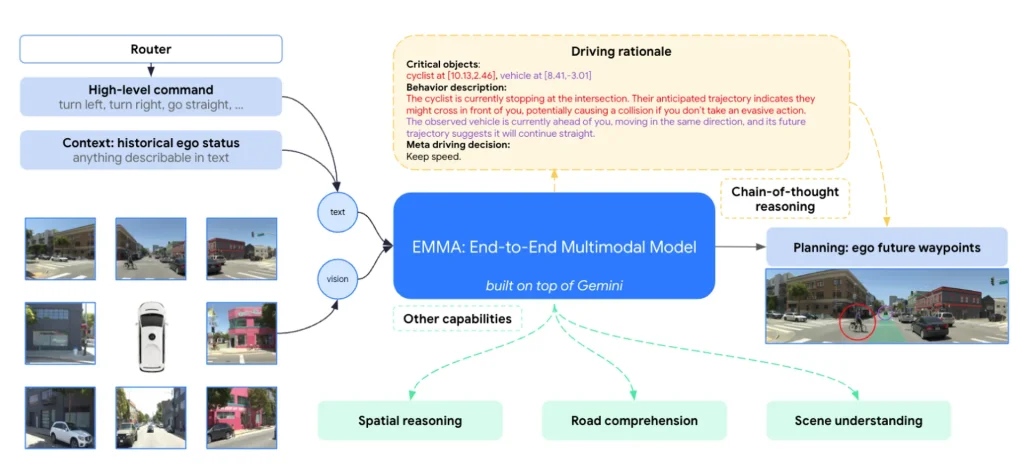

EMMA, powered by Gemini—a multimodal large language model from Google—uses a unified, end-to-end trained model to generate vehicle trajectories directly from sensor data. Developed and fine-tuned for autonomous driving, EMMA leverages Gemini’s vast world knowledge to better interpret complex on-road scenarios.

The research highlights the benefits of multimodal models like Gemini for autonomous driving and evaluates the advantages and limitations of the end-to-end approach. It emphasizes the value of incorporating multimodal world knowledge for tasks that demand spatial understanding and reasoning. EMMA shows positive task transfer across several essential driving tasks: jointly training it on trajectory prediction, object detection, and road graph understanding improves performance compared with models trained on each task individually, which suggests potential for future scaled-up applications.

“EMMA is research that demonstrates the power and relevance of multimodal models for autonomous driving,” said Waymo VP and Head of Research Drago Anguelov. “We are excited to continue exploring how multimodal methods and components can contribute towards building an even more generalizable and adaptable driving stack.”

EMMA embodies the wider AI research goal of applying large-scale multimodal learning models to varied domains. Built on Gemini, EMMA is tailored for tasks like motion planning and 3D object detection in autonomous driving.

Features of EMMA:

- **End-to-End Learning:** EMMA processes raw camera inputs and textual data to produce driving outputs such as planned trajectories, detected objects, and road graph elements.

- **Unified Language Space:** It represents non-sensor inputs and outputs as natural language text, making the most of Gemini’s world knowledge.

- **Chain-of-Thought Reasoning:** EMMA applies chain-of-thought reasoning, which improves planning performance by 6.7% and makes its decisions more interpretable.
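To make the "unified language space" idea more concrete, here is a minimal Python sketch of how a planned trajectory might be written out as plain text and parsed back into numbers. The `(x,y)` coordinate format, the two-decimal precision, and the helper names (`trajectory_to_text`, `text_to_trajectory`, `build_planning_prompt`) are hypothetical illustrations only, not EMMA's actual encoding or Waymo code. In a real multimodal model, camera images would be supplied alongside the text rather than inside it.

```python
from typing import List, Tuple

# Hypothetical representation: (x, y) waypoints in metres in an ego-centred frame.
Waypoint = Tuple[float, float]


def trajectory_to_text(waypoints: List[Waypoint], decimals: int = 2) -> str:
    """Serialize waypoints as plain text, e.g. '(1.50,0.00) (3.00,0.10)'."""
    return " ".join(f"({x:.{decimals}f},{y:.{decimals}f})" for x, y in waypoints)


def text_to_trajectory(text: str) -> List[Waypoint]:
    """Parse the illustrative text format above back into numeric waypoints."""
    waypoints: List[Waypoint] = []
    for token in text.split():
        # Drop the surrounding parentheses, then split on the comma.
        x_str, y_str = token.strip("()").split(",")
        waypoints.append((float(x_str), float(y_str)))
    return waypoints


def build_planning_prompt(past: List[Waypoint], command: str) -> str:
    """Hypothetical text prompt combining non-sensor inputs (command, ego history)."""
    return (
        f"Driving command: {command}\n"
        f"Ego history: {trajectory_to_text(past)}\n"
        "Predict the future trajectory:"
    )


if __name__ == "__main__":
    prompt = build_planning_prompt([(-3.0, 0.0), (-1.5, 0.0)], "turn left")
    print(prompt)
    # A model's text answer would then be decoded back into coordinates.
    print(text_to_trajectory("(1.50,0.00) (3.00,0.10)"))
```

Because inputs and outputs share one text format, the same model and decoding step can, in principle, serve several tasks. The trade-off illustrated here is that numeric precision is limited by how many digits are written out.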

Co-trained across multiple tasks, EMMA achieves state-of-the-art or competitive results on trajectory prediction, camera-based 3D object detection, road graph estimation, and scene comprehension, performing comparably to or better than models trained individually on each task.

One example shows EMMA navigating urban traffic and yielding to a dog on the road—an object it was not specifically trained to detect. The yellow line represents road graph estimation, while the green line depicts future trajectory planning.

Despite its promise, EMMA faces several challenges, such as its limited capacity to process long-term video sequences and its lack of integration with LiDAR and radar, which are essential for robust 3D sensing. Improving simulation methods, reducing model inference times, and ensuring safe decision-making remain areas of focus.

EMMA showcases how multimodal techniques can improve the performance and generalizability of autonomous vehicle systems. Waymo’s work illustrates how applying cutting-edge AI to real-world challenges can extend its role in dynamic, decision-intensive environments, potentially benefiting other fields that require rapid, informed decision-making.

Waymo continues to explore how multimodal models can improve road safety and accessibility and invites those interested in AI’s impactful challenges to explore career opportunities with them.